Amazon AWS outage in April explained

The Amazon AWS outage on April 21st, 2011 brought down many popular sites, including Reddit, Foursquare, HootSuite, Quora and the Buddy Events app for Facebook. There have been many blogs and web sites with write-ups analysing why the outage occurred, but the best summary I have come across is on Rightscale.com's blog. It's definitely worth a read, as it's the best explanation I have read to date, and there's also a lessons learnt article here as well. For a more technical explanation, check out Amazon's AWS message as well as the article by Cloud Computing Today.

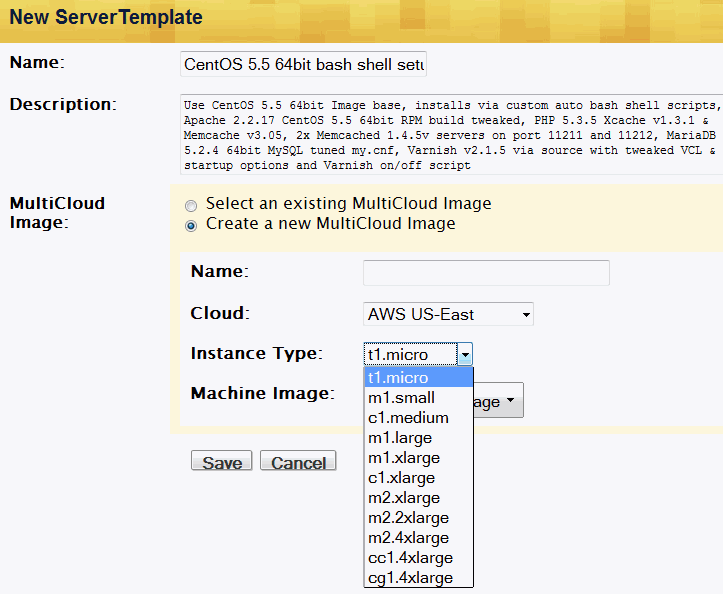

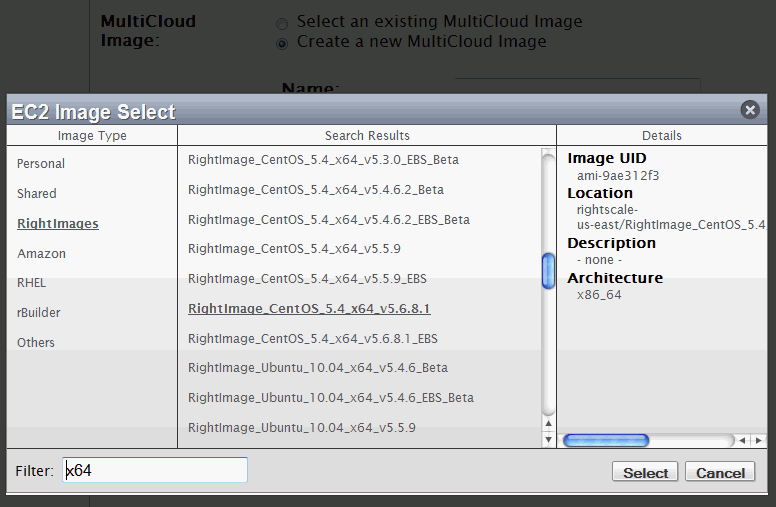

Rightscale.com is a cloud management platform that lets you manage and scale large numbers of cloud servers across multiple cloud hosting providers from a single online dashboard. Some of the most heavily trafficked web properties online, including Zynga and Playfish, also use Rightscale.com. I use Rightscale.com with my Amazon EC2, Rackspace Cloud Server and GoGrid server accounts. Luckily, none of my Amazon EC2 instances were affected.

The outage was triggered by an operator error during a router upgrade, which funneled very high-volume network traffic into a low-bandwidth control network used by EBS (Elastic Block Store). The resulting flood on the control network effectively isolated a large number of EBS servers from one another, which broke volume replication and caused these servers to start re-replicating their data to fresh servers. This large-scale re-replication storm in turn had two effects: many re-replication attempts failed, leaving volumes offline until they could be manually recovered, and the flood of re-replication events overwhelmed the EBS control plane, affecting its operation across the entire us-east region.
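To get a feel for why a re-replication storm snowballs, here is a toy model in Python. It is only a sketch under my own assumptions and not how EBS actually works: each isolated volume asks the control plane for a spare replica slot once per round, only as many requests succeed as there are free slots, and every volume that doesn't get one retries in the next round. The names `simulate_storm`, `spare_slots` and `retry_rounds` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StormResult:
    re_replicated: int     # volumes that found a spare slot and re-mirrored
    stuck: int             # volumes still degraded, i.e. needing manual intervention
    control_requests: int  # total re-replication requests sent to the control plane


def simulate_storm(isolated_volumes: int, spare_slots: int, retry_rounds: int) -> StormResult:
    """Hypothetical toy model of a re-replication storm (not Amazon's algorithm).

    Each round, every volume still waiting for a replica issues one request to
    the control plane; only as many succeed as there are free slots left.
    """
    pending = isolated_volumes
    free = spare_slots
    requests = 0
    re_replicated = 0
    for _ in range(retry_rounds):
        if pending == 0:
            break
        requests += pending            # every waiting volume retries this round
        granted = min(pending, free)   # capacity limits how many can succeed
        free -= granted
        re_replicated += granted
        pending -= granted
    return StormResult(re_replicated, pending, requests)


# Small failure with plenty of spare capacity: recovers in one round.
print(simulate_storm(isolated_volumes=100, spare_slots=1000, retry_rounds=5))
# Large failure that exhausts spare capacity: the stuck volumes keep
# hammering the control plane every round.
print(simulate_storm(isolated_volumes=1000, spare_slots=300, retry_rounds=5))
```

In the second case 300 volumes re-mirror, 700 are left stuck, and the control plane sees 3,800 requests instead of 1,000, because the stuck volumes keep retrying. That is roughly the pattern described above: the failed re-replications and the load on the control plane feed each other.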

Further reading here.

Example of one of my Rightscale Amazon EC2 instances.